The Philippines’ Commission on Elections begins public source code viewing process

Update (25 October 2015, 11:56pm): COMELEC Commissioner Luie Guia responded to my Facebook posting of this article, clarifying that the “decision to have a source code review is, apart from gaining public trust, a requirement of law (source code must be made available to interested parties as soon as the supplier has been chosen)”. This is specified in Section 14 of Republic Act 8436 (as amended by R.A. 9369).

“SECTION 14. Examination and Testing of Equipment or Device of the AES and Opening of the Source Code for Review. – The Commission shall allow the political parties and candidates or their representatives, citizens’ arm or their representatives to examine and test.

“The equipment or device to be used in the voting and counting on the day of the electoral exercise, before voting starts. Test ballots and test forms shall be provided by the Commission.

“Immediately after the examination and testing of the equipment or device, the parties and candidates or their representatives, citizens’ arms or their representatives, may submit a written comment to the election officer who shall immediately transmit it to the Commission for appropriate action.

“The election officer shall keep minutes of the testing, a copy of which shall be submitted to the Commission together with the minutes of voting.”

“Once an AES technology is selected for implementation, the Commission shall promptly make the source code of that technology available and open to any interested political party or groups which may conduct their own review thereof.”

On Monday, October 12, COMELEC-approved reviewers will begin a grueling five-month process of reading the program source code for the Philippines’ automated election system (AES). This is a confidence-building measure intended to give the public an opportunity to understand, in detail, how each part of the system works.

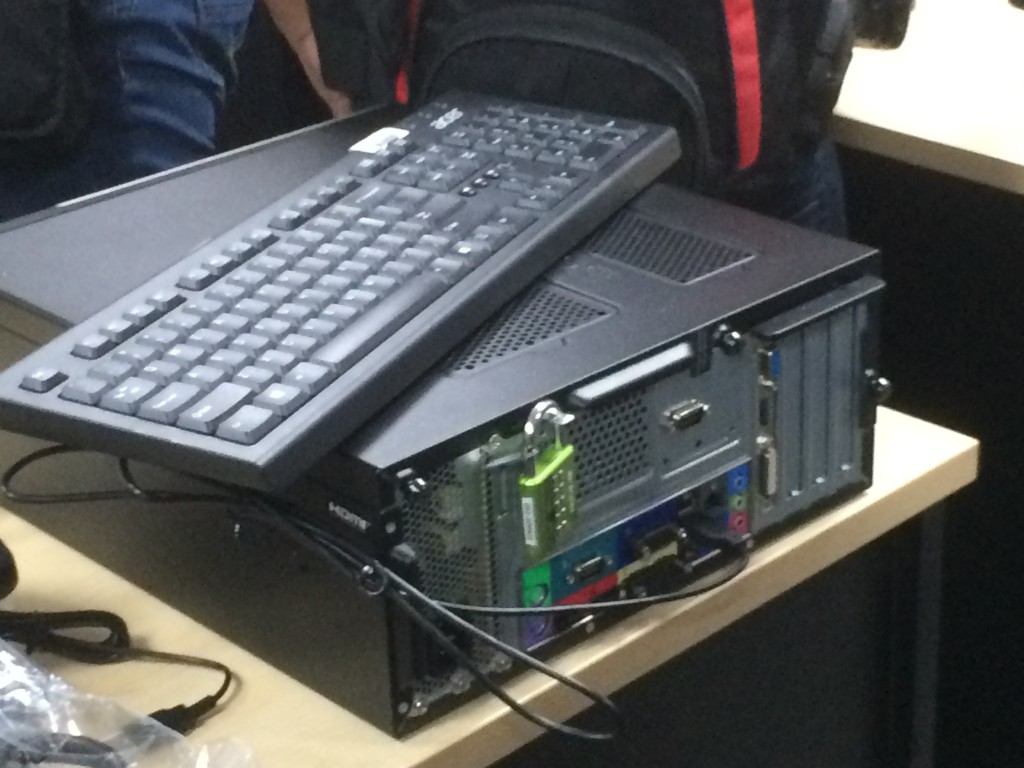

COMELEC has established a code review site at De La Salle University. There, reviewers from various political parties and the Parish Pastoral Council for Responsible Voting will be examining the program source code provided by technology supplier Smartmatic Inc., in a secure environment set up jointly by COMELEC and Smartmatic I.T. personnel. Eighteen-plus isolated workstations will be set up, running a derivative of a popular Linux distribution, with specially-compiled kernels to disable avenues for data exfiltration (no I/O ports, no LAN networking), and strict access controls applied for non-root reviewers’ accounts (password-secured LVM partitions, wiped clean at the end of each day, and reloaded with a clean environment and a copy of the Smartmatic code base at the start of the next one).

The public source code review is not, by itself, intended to formally certify that the automated election system supplied by Smartmatic, Inc. is fit for purpose. The COMELEC Technical Evaluation Committee, constituted by Republic Act 8436 (as amended by R.A. 9369), is mandated to

“…certify, through an established international certification entity to be chosen by the Commission from the recommendations of the Advisory Council … that the AES, including its hardware and software components, is operating properly, securely, and accurately,”

for which purpose they have chosen SLI Global Solutions, a Voting System Test Laboratory based in Colorado, USA. It is the outcome of a formal validation test suite, applied by SLI Global Solutions to the AES supplied by Smartmatic, that will decide certification. The public review, by contrast, is not designed to explore how the system could be subverted by a determined, well-resourced attacker. Which is curious, considering our history of “guns, goons, and gold”; clearly, the outcome of polls is a high-stakes exercise in more ways than merely winning an elective post.

The review will be conducted to introduce reviewers to the minds of the designers, not to demonstrate how the system behaves under attack, or to explore means to deter such attacks. The latter exploration should naturally follow after receiving information from the vendor about how the system is built. It is not enough to accept their say-so that “this problem is addressed by this design decision”; if technical means are available to attempt to falsify those vendor claims, reviewers should make those attempts, and the outcome of those experiments should be documented for the entire team and for COMELEC to eventually disseminate to the public.

The following paragraphs, addressed to the COMELEC I.T. systems staff and civil society reviewers, describe how to get around these limitations and carry out a team “software archaeology” effort within the constraints of the code review environment.

Analysis of a big system requires note-taking – a LOT of note-taking

The guided code walkthroughs will be reminiscent of scrum stand-up meetings in a software production environment – but without the pressure to deliver a product. Analyzing the behavior of each major subsystem – Elections Management server (EMS), Consolidated Canvas server (CCS), and vote counting machines (VCMs) – during the guided code walkthroughs will involve each reviewer interrogating the Smartmatic representative to understand the interaction of many, many code modules. While much of this information cannot be part of the public record (being proprietary design intent and implementation detail), it should still be part of the working documentation, equally available to every participant in the review process. Making this information easily accessible underscores the fact that this is a group effort to comprehend, rather than build, an automated election system.

- We can document what is being learned the hard way (hand-written notes on COMELEC-provided commentary sheets, collected at the end of each day) or the easy way: enable Ethernet LAN networking on all workstations, and reserve one workstation as a team wiki server. That machine alone would not be wiped at the end of each review day, and would hold commentary and notes from every participant for the duration of the review.

Static code analysis only gets you so far – you have to actually run the code to understand how it works (or doesn’t work) as the vendor Smartmatic claims.

- Simulate networked Election Management and Consolidated Canvas servers either a) in virtual machines, or b) as processes using loopback devices as simulated network adapters. Use brctl to create a bridge interface consisting of loopback device ports, to serve as a virtual, well, bridge. Each server (EMS, CCS) might be able to execute as a separate Java process on a single workstation. Simulating an AES client-server network is simplified, of course, by not disabling Ethernet LAN networking inside the review center, and using separate review center machines as platforms to proxy for the real servers.

- Analyze network traffic using tcpdump or Wireshark to gain insight into protocol handling during data exchange between subsystems. Verify that packets never traverse the network unencrypted, whether the packets contain ballot records or status and control messages. Verify that identical messages (e.g. identical ballot submissions) are not susceptible to replay attack, that sort of thing. This works whether you’re listening to a real Ethernet device or a virtual bridge.

- Insist on gaining access to at least one vote counting machine, hooked up to the simulated AES servers in the review center, to be able to analyze the traffic between VCMs, the consolidated canvas server, and election management server.

- Find out how to confirm that the binary (binaries?) from a trusted build of the vote counting machine matches that on each of the VCMs to be deployed on election day. Determine whether the procedure is resistant to an attacker injecting an unauthorized binary, either by software design or by hardware measures (e.g. by not exposing processor JTAG pads on production boards that can be reached using a pogo pin jig or on-board surface connectors). Trust procedures that have been demonstrated, and whose efficacy you can see for yourself.
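A first, passive pass at the replay question raised in the traffic-analysis item above could look like the sketch below. It assumes reviewers have already exported application-layer payloads from a tcpdump or Wireshark capture into Python; the `CapturedMessage` shape, the host labels, and the sample payloads are all hypothetical and say nothing about how the Smartmatic protocol is actually framed. Byte-identical payloads appearing more than once suggest the protocol may lack per-message freshness (a nonce, counter, or timestamp), which is worth following up with an active re-send test.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class CapturedMessage(NamedTuple):
    """One payload pulled out of a capture (hypothetical shape, not a real format)."""
    timestamp: float
    source: str
    destination: str
    payload: bytes


def find_repeated_payloads(
    messages: Iterable[CapturedMessage],
) -> Dict[str, List[CapturedMessage]]:
    """Group messages by the SHA-256 of their payload and keep only the
    groups that contain more than one message."""
    groups: Dict[str, List[CapturedMessage]] = defaultdict(list)
    for message in messages:
        digest = hashlib.sha256(message.payload).hexdigest()
        groups[digest].append(message)
    return {digest: group for digest, group in groups.items() if len(group) > 1}


if __name__ == "__main__":
    # Made-up sample data standing in for an exported capture.
    capture = [
        CapturedMessage(1.0, "vcm-01", "ccs", b"ballot-A"),
        CapturedMessage(2.0, "vcm-01", "ccs", b"ballot-B"),
        CapturedMessage(3.0, "vcm-01", "ccs", b"ballot-A"),
    ]
    for digest, group in find_repeated_payloads(capture).items():
        times = ", ".join(str(m.timestamp) for m in group)
        print(f"payload {digest[:12]}... seen {len(group)} times at t = {times}")
```

An empty result does not prove replay resistance; it only means no identical bytes were observed in that capture. The stronger test is still to re-inject a captured message and see whether the receiving server accepts it.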

Confirm that compiled binary hashes are not spoofable by chosen-prefix attack

- Be bothered by the assertion that “it is possible to confirm that the binaries are authentic by comparing the hash of a binary from a known-good build with the hash of a binary executing on a working system”. It is known that some older hashing algorithms, including MD5 and, more recently, SHA-1, are susceptible to collision attacks. In the chosen-prefix variant demonstrated against MD5, two different binaries A and B, which result in different hashes H(A) and H(B), can be made to generate the same hash by appending a specially computed suffix to each, so that H(A+suffixA) = H(B+suffixB). Ask and find out whether Smartmatic at least uses two different hash algorithms to obtain a signature (and, please, let none of the algorithms be MD5 or one of the similarly weak hash algorithms). Consult with a cryptanalyst at a university near you to find out how this might be done with binary blobs such as compiled firmware.

- Also determine how this confirmation of binaries’ authenticity is carried out during each test and deployment phase. Can we be absolutely certain that identical copies of the firmware, generated by a trusted build process, are distributed across all the vote counting machines, in all locations across the archipelago? How is that assured?
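To make the two-algorithm idea concrete, here is a minimal sketch of fingerprinting a binary with two unrelated hash functions in a single pass, then checking fingerprints reported from the field against the one from the trusted build. The choice of SHA-256 and SHA3-256, the machine IDs, and the sample "firmware" bytes are my assumptions for illustration; nothing here reflects what Smartmatic actually does.

```python
import hashlib
import hmac
import io
from typing import BinaryIO, Dict, List, Tuple

# Assumption: two structurally different algorithms, neither known to be broken.
ALGORITHMS = ("sha256", "sha3_256")


def fingerprint(stream: BinaryIO, chunk_size: int = 65536) -> Tuple[str, ...]:
    """Read the stream once and return one hex digest per algorithm."""
    hashers = [hashlib.new(name) for name in ALGORITHMS]
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        for hasher in hashers:
            hasher.update(chunk)
    return tuple(hasher.hexdigest() for hasher in hashers)


def mismatched_machines(
    trusted: Tuple[str, ...],
    reported: Dict[str, Tuple[str, ...]],
) -> List[str]:
    """Return the IDs of machines whose fingerprint differs from the trusted
    one in any digest. Every digest must match for a machine to pass."""
    bad = []
    for machine_id, digests in sorted(reported.items()):
        same = len(digests) == len(trusted) and all(
            hmac.compare_digest(got, want) for got, want in zip(digests, trusted)
        )
        if not same:
            bad.append(machine_id)
    return bad


if __name__ == "__main__":
    # Stand-in byte strings; a real check would open the firmware image files.
    trusted = fingerprint(io.BytesIO(b"firmware built by the trusted process"))
    field_reports = {
        "VCM-0001": fingerprint(io.BytesIO(b"firmware built by the trusted process")),
        "VCM-0002": fingerprint(io.BytesIO(b"firmware with one extra byte!")),
    }
    print("mismatches:", mismatched_machines(trusted, field_reports))
```

Requiring both digests to match means an attacker would need a simultaneous collision in both functions. Note that this only helps if the fingerprint is computed by something the attacker does not control: a compromised machine can simply report the expected values, which is exactly why the deployment procedure itself needs scrutiny.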

Until accredited reviewers attest otherwise on our behalf, I’ll take the claims in this article [dead link] (or here) as just a strong bit of marketing. Do us all proud, you geeks!